Anthropomorphization is not the problem with AI. The problem is the tools that the AI has. Anthropomorphization means treating an animal or a machine as if it were human. A familiar example is animals that act like humans in comic strips or other entertainment, such as Donald Duck movies. The trouble with anthropomorphizing AI is that the AI is a computer program.

If the AI lacks a certain skill, it might not tell its operators. Every skill that the AI has requires its own database. If a user asks the AI whether it can drive a car, the AI may simply look at the names of its databases. That can cause problems, because the data inside a database matters far more than the database's name. In those cases, the results could be catastrophic.
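The difference between checking a database's name and checking its contents can be sketched in a few lines. This is only an illustration under assumptions: the `SKILL_DATABASES` store, the action names, and both functions are hypothetical and do not describe how any real AI system is built.

```python
# Hypothetical skill store: database name -> list of action records.
SKILL_DATABASES = {
    "drive_car": [],  # the name exists, but there is no data inside
    "hold_conversation": ["greet", "answer", "ask_back"],
}


def claims_skill(name, databases):
    """Naive check: looks only at the database name."""
    return name in databases


def has_usable_skill(name, databases, required_actions):
    """Stricter check: the database must hold every required action."""
    if name not in databases:
        return False
    records = databases[name]
    return all(action in records for action in required_actions)
```

With this store, the naive check says the AI can drive a car, while the stricter check says it cannot, because the `drive_car` database is empty.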

So this is material for philosophical and ethical discussion. When we discuss AI, we must realize one thing: if we give missions to the AI, we must be sure that the AI "realizes" what it must do.

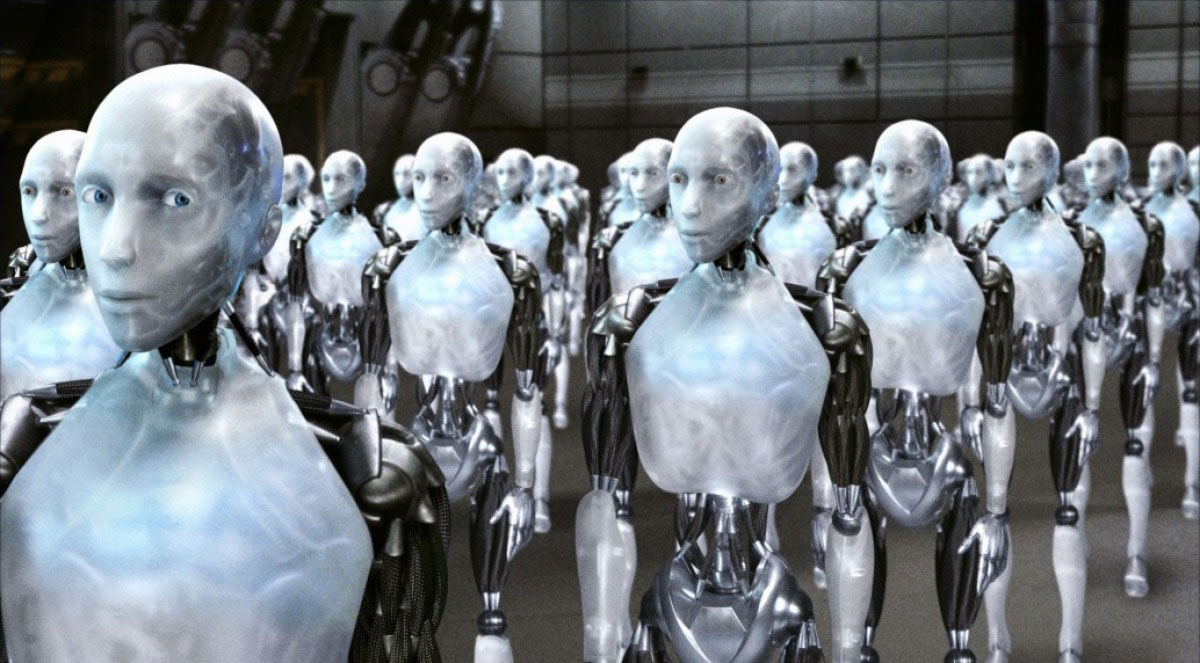

The thing is that AI cannot do everything. It requires a special module for each skill that it has. Without those modules, AI cannot do anything. Even if the AI can hold a conversation like a human, it might not have the skills to drive a physical car or control physical robots.

Some people are afraid that AI can be used as a cheating tool in universities. But if somebody wants to cheat, that person can ask other humans to write the document. So AI is only one more tool for people who choose not to be honest. Most of that cheating happens on the cheater's own time, outside the university buildings. That means that somebody who wants to cheat will cheat anyway. So the AI just democratizes cheating. The cheater no longer needs the right relationships; that person just asks the AI to do the dirty work.

When the AI writes code, it must have a precise description of that code's purpose. If somebody orders the AI to program a bodyguard robot, that causes problems. The AI might not even know what the term "bodyguard" means, and in that case it will make mistakes.

One good example is an AI whose mission is to work as a bodyguard for some person. There is a possibility that the AI understands the mission like this: it must guard the body of the person. Then it might shoot the person, because the AI might think that a bodyguard is someone who guards the body of a dead person.

Misunderstandings with the AI happen when the AI's mission is not very common. Let me explain. Some jobs are so-called regular jobs, like that of an electrician. And there are unusual jobs, like that of a bodyguard. When the AI gets an unusual mission, it may not understand what it should do. The descriptions and orders for the AI are stored in modules. Those modules are databases.

Those databases must include every possible action that the robot or the AI must perform. If the AI does not find the module, it can try to generate it itself, or it can ask the programmer to write the action module for it. The problem is that the programmer must know precisely what a bodyguard must do. The programmer can be another AI. And if that programmer does not know what a bodyguard does, the results are horrifying.
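The module lookup described above can be sketched roughly like this. Of the two options mentioned, the sketch takes the second one: it refuses to guess and asks for a module to be written. The `ACTION_MODULES` table, the action names, and `MissingModuleError` are hypothetical names made up for the illustration.

```python
class MissingModuleError(Exception):
    """Raised when no action module exists for a mission."""


# Hypothetical action modules: mission name -> ordered list of actions.
ACTION_MODULES = {
    "electrician": ["cut_power", "inspect_wiring", "replace_fuse", "restore_power"],
}


def load_actions(mission, modules):
    """Return the actions for a mission, or refuse instead of guessing."""
    if mission not in modules:
        raise MissingModuleError(
            f"No action module for {mission!r}; ask the programmer to define it."
        )
    return modules[mission]
```

Here a regular job like `"electrician"` loads normally, while an unusual one like `"bodyguard"` stops with an error until somebody who knows precisely what a bodyguard does writes the module.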

The problem with the AI is that it doesn't know anything. It doesn't even think like humans. AI is a set of complicated data structures, and if there are problems in those structures, they can cause a catastrophe. One source of problems is cases that fall outside the AI's operational area. There is a possibility that somebody loads the program of a combat robot into a self-driving car. Then that car starts to treat other cars and pedestrians as enemies.
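One possible guard against that kind of mix-up is to make each program declare the platform it was written for and to refuse to load it anywhere else. The sketch below is only an assumption about how such a check might look; the `Program` class, the `intended_platform` field, and the platform labels are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Program:
    name: str
    intended_platform: str  # hypothetical label for the operational area


def load_program(program, platform):
    """Load a program only on the platform it was written for."""
    if program.intended_platform != platform:
        raise ValueError(
            f"{program.name} is meant for {program.intended_platform}, "
            f"not for {platform}"
        )
    return f"{program.name} loaded on {platform}"
```

With this check, a program labeled for a combat robot is rejected by a car's loader before it ever sees other cars or pedestrians. The check is only as good as the label, so it does not help if the label itself is wrong.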

The biggest problem with AI is that its creators don't know where the customer wants to use it. If they don't know the real purpose of their product, that makes it untrustworthy. The problem is that the world is full of people who think something other than what they tell other people. If the AI doesn't have the right modules, or the modules are not made right, that makes the AI dangerous.
